Things that are different should be handled differently. Right?

Wrong—at least if you’re a neuron. In the outer cortex of the brain, different sensory inputs are sometimes treated as if they’re the same.

That’s the secret (and the flaw) of what I call the Almost Gate—a neural shortcut baked into the architecture of cognition. It emerges from the physical properties of neurons themselves.

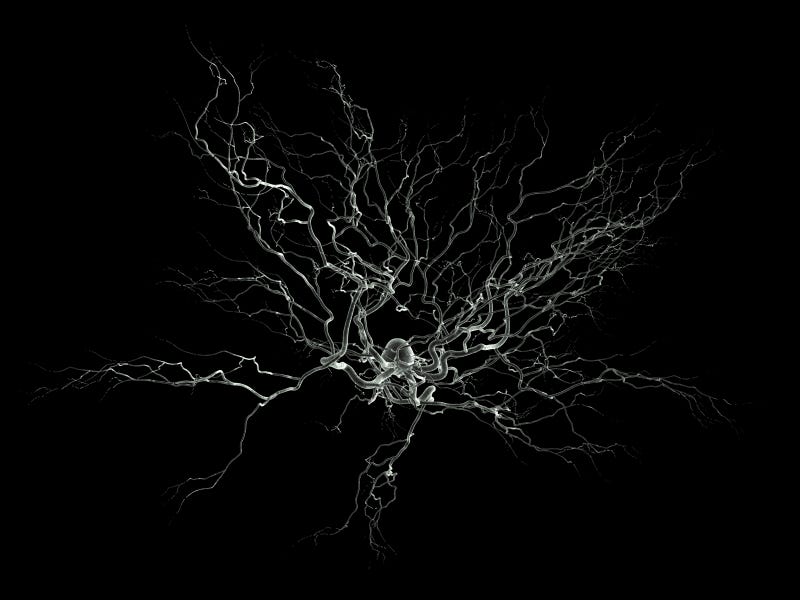

So what does a neuron actually look like?

The image below shows a typical cortical neuron. Most of its inputs arrive through the branching dendrites above the cell body, the bulbous, mushroom-cap shape that sits just above the bottom quarter of the image. If the combined input exceeds a certain threshold, the neuron fires, sending a signal down the axon that extends below.

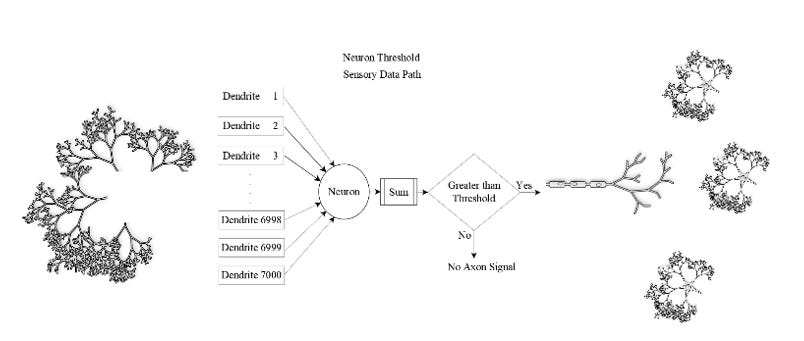

Path from Sensory Data to Signal

Let’s trace (as shown in the schema below) the signal path through a sensory neuron in the human cortex. The neuron can receive a large number of sensory inputs (up to thousands) at its dendrites. In the cell body, if the aggregate incoming electrical potential is greater than the neuron’s threshold value, the cell body initiates an electrical signal down its axon, which carries it to the synaptic gaps of many (again, thousands of) receiving neurons.

Sensory Data → Neural Actions → Axon → Receiving Neurons

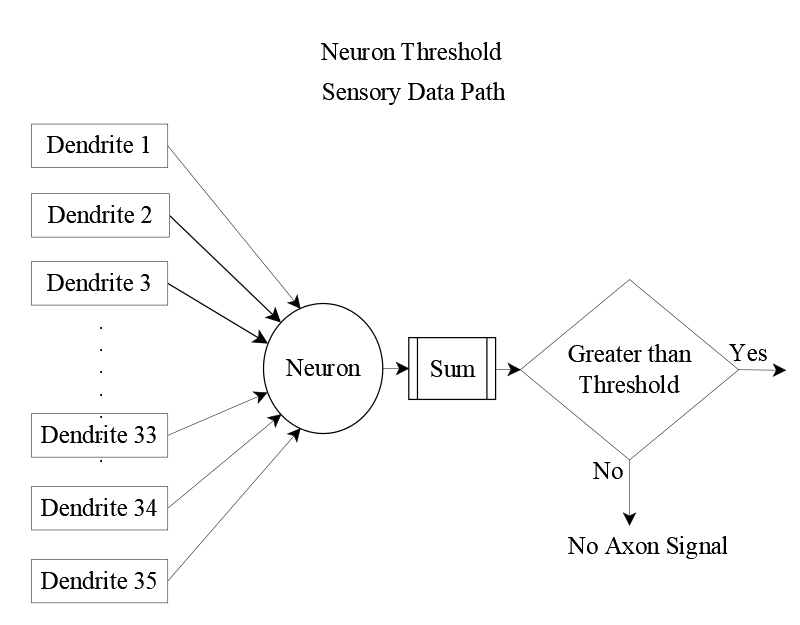

Neural Threshold and the Almost Gate

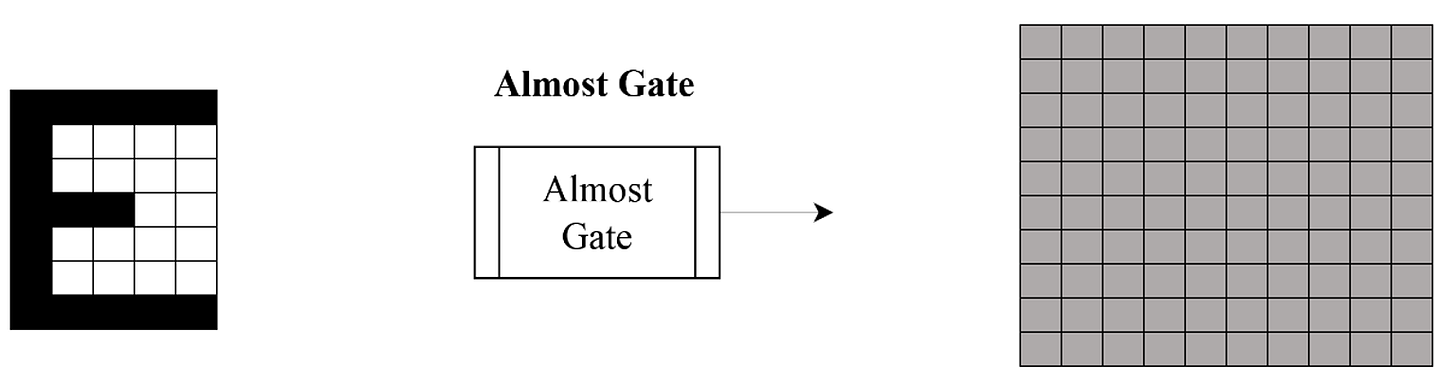

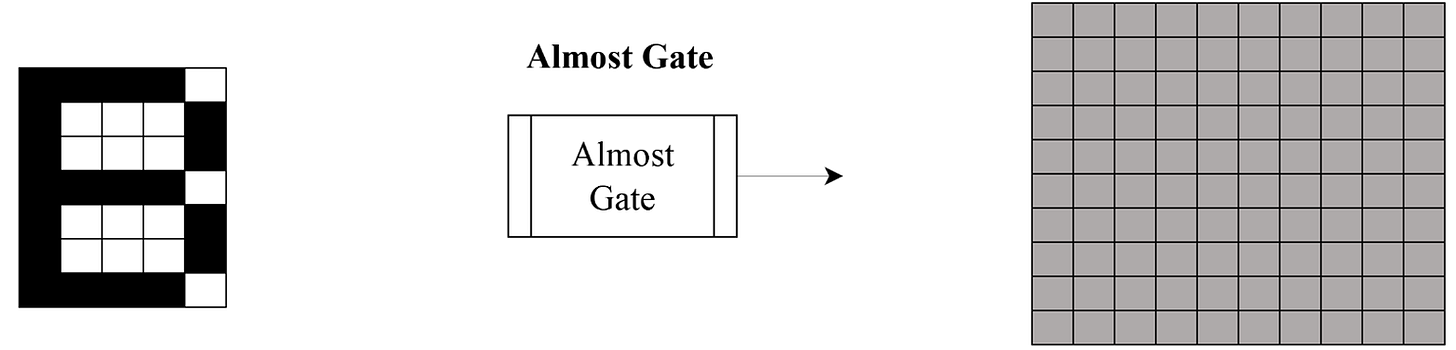

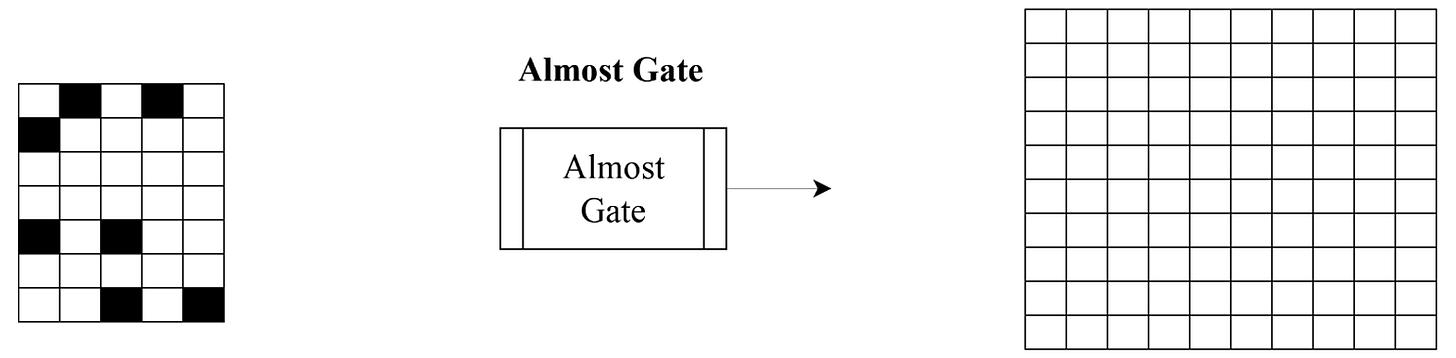

Let’s keep the core elements in place but simplify the system to make the concept more tangible. In the diagram below, the Almost Gate receives 35 inputs (arranged in a 5×7 grid) and connects to 100 outputs (a 10×10 grid). These numbers aren’t arbitrary—they’re chosen to make the examples easier to follow.

Here’s how it works:

When the 35 sensory data points reach the neuron’s dendritic projections, each carries a small electrical value. These values accumulate in the neuron’s cell body. If their total exceeds the neuron’s threshold, the neuron fires—sending a signal down its axon. If the total falls short, nothing happens.

This is the essence of the Almost Gate. It doesn’t care which inputs were active—only whether the total surpassed the threshold. That means all combinations of sensory data that exceed the threshold are treated identically. They all trigger the same axon signal. In that moment, the neuron doesn’t distinguish between them. It simply says: close enough.

They’ve passed the Almost Gate.

(In real neurons, it’s not just the number of inputs that matters—it’s also when they arrive. Inputs that cluster tightly in time are more likely to push the neuron past its threshold. But for now, we’ll focus on the simplified version: total input strength.)
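As an aside, the timing effect can be sketched with a leaky accumulator: the stored value decays a little at every time step, so inputs that arrive together add up better than the same inputs spread out. This is only an illustration under stated assumptions. The function name `fires`, the `leak` factor of 0.5, and the threshold of 4.0 are hypothetical choices, not measured properties of real neurons.

```python
# Hypothetical leaky accumulator (an illustration, not a biological model).
# The stored potential decays each time step, so inputs that arrive together
# add up better than the same inputs arriving one at a time.

def fires(arrivals, threshold, leak=0.5):
    """Return True if the potential ever exceeds the threshold.

    arrivals[t] is the total input arriving at time step t.
    leak is the assumed fraction of potential kept from one step to the next.
    """
    potential = 0.0
    for incoming in arrivals:
        potential = potential * leak + incoming  # decay, then add new input
        if potential > threshold:
            return True
    return False


clustered = [0, 0, 6, 0, 0, 0]  # six units of input arrive in a single step
spread = [1, 1, 1, 1, 1, 1]     # the same six units, one per step

print(fires(clustered, threshold=4.0))  # True
print(fires(spread, threshold=4.0))     # False
```

Both sequences deliver the same total input, but only the clustered one crosses the assumed threshold.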

Now, two cases matter:

Case 1: The inputs exceed the threshold → a signal is sent.

Case 2: The inputs fall short → no signal is sent.
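The two cases can be sketched in a few lines of Python. This is a minimal illustration rather than a biological model: the function name `almost_gate`, the value of 1.0 per active input, and the threshold of 10.0 are all assumptions chosen for the example.

```python
# Minimal sketch of the Almost Gate: sum the inputs, compare to a threshold.
# All names and numbers here are illustrative assumptions.

N_INPUTS = 35    # the 5x7 input grid
N_OUTPUTS = 100  # the 10x10 output grid


def almost_gate(inputs, threshold):
    """Return the signal delivered to each of the N_OUTPUTS receiving neurons."""
    if len(inputs) != N_INPUTS:
        raise ValueError(f"expected {N_INPUTS} inputs, got {len(inputs)}")
    total = sum(inputs)         # values accumulate in the cell body
    fired = total > threshold   # Case 1 if True, Case 2 if False
    signal = 1 if fired else 0  # the same signal goes to every receiver
    return [signal] * N_OUTPUTS


# Case 1: 15 active inputs (1.0 each) against an assumed threshold of 10.0.
strong = [1.0] * 15 + [0.0] * 20
# Case 2: only 4 active inputs.
weak = [1.0] * 4 + [0.0] * 31

print(sum(almost_gate(strong, threshold=10.0)))  # 100
print(sum(almost_gate(weak, threshold=10.0)))    # 0
```

Note that `almost_gate` never looks at which positions were active. Only the total matters.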

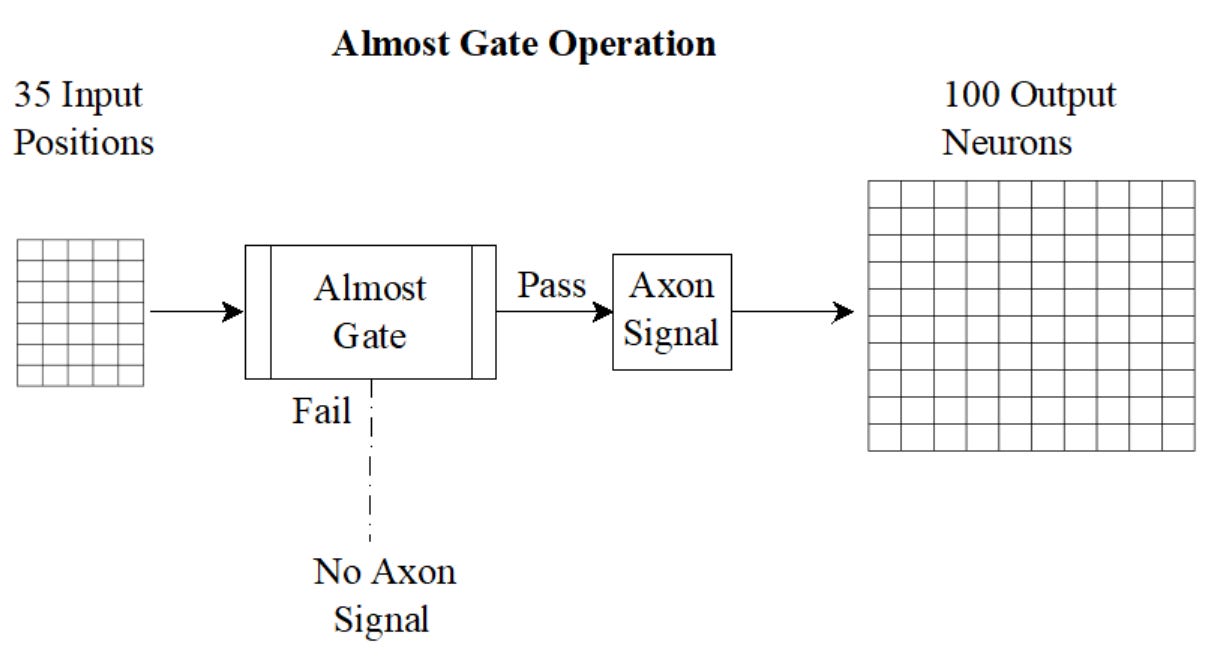

To streamline the diagram (below), we’ve replaced the internal summing process with a symbolic Almost Gate. On the left, the 5×7 input grid. On the right, the 10×10 output grid. In between, the gate that decides: pass or block.

This behavior reflects the All-or-None Law of neural firing. A neuron either fires at full strength or not at all. Increasing the intensity or duration of the input doesn’t change the amplitude of the signal—only whether it happens.

Examples

Let’s focus on the input and resulting output of the Almost Gate.

Case 1: Threshold Exceeded

Example 1

The arrangement of active inputs (shown in black) forms a recognizable letter E. Together, the active inputs in the 35-cell grid exceed the neuron’s threshold, so the axon fires.

The output array is uniformly gray. This indicates that the same signal is sent to all connected neurons. The specific shape of the input doesn’t matter. Once the threshold is crossed, the output is identical.

Example 2

This time, the active inputs form the letter B. As in the previous example, the total input exceeds the neuron’s threshold, so the axon fires.

The output—again shown as a uniform gray 10×10 grid—is exactly the same as before. The neuron doesn’t care that the input was a B instead of an E. It only cares that the threshold was crossed.

The result? Different inputs, same output.

Case 2: Threshold Not Reached

Example 3

Here the input is sparse: too few active signals to cross the neuron’s threshold. As a result, the axon remains silent.

The output grid below reflects this: all 100 receiving neurons show no activity. No signal was sent. No response was triggered.

This is the other side of the Almost Gate: Not enough → nothing happens.
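The three examples can be reproduced with small 5×7 bitmaps. The sketch below is illustrative only: the exact pixel patterns, the helper names `to_inputs` and `gate_output`, and the threshold of 12 active inputs are assumptions made for the example, not values taken from the diagrams.

```python
# Illustrative 5x7 bitmaps ('#' = active input). The patterns and the
# threshold of 12 are assumptions chosen for this sketch.
E = ["#####",
     "#....",
     "#....",
     "####.",
     "#....",
     "#....",
     "#####"]

B = ["####.",
     "#...#",
     "#...#",
     "####.",
     "#...#",
     "#...#",
     "####."]

SPARSE = [".....",
          "..#..",
          ".....",
          ".....",
          "...#.",
          ".....",
          "#...."]


def to_inputs(rows):
    """Flatten a bitmap into 35 values: 1 for active, 0 for inactive."""
    return [1 if ch == "#" else 0 for row in rows for ch in row]


def gate_output(rows, threshold=12):
    """Return the 10x10 output grid: all 1s if the gate fires, else all 0s."""
    fired = sum(to_inputs(rows)) > threshold
    return [[1 if fired else 0] * 10 for _ in range(10)]


print(sum(to_inputs(E)), sum(to_inputs(B)), sum(to_inputs(SPARSE)))  # 18 20 3
print(gate_output(E) == gate_output(B))              # True
print(any(any(row) for row in gate_output(SPARSE)))  # False
```

The E and the B differ in shape and even in their totals (18 versus 20 active inputs), yet the output grids are identical. The sparse pattern produces an all-zero grid.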

Musing

But wait a second—we do recognize the difference between the letters E and B.

That’s because we don’t think with a single neuron. Our brains are layered systems. Each neuron acts as an Almost Gate, but together, they form intricate maps—networks that detect patterns, refine distinctions, and build meaning.

Although not essential to understanding the Almost Gate itself, it’s worth noting how neurons communicate. The signal sent down the axon doesn’t directly “plug into” the next neuron. Instead, it reaches a synaptic gap—a tiny space between the axon terminal and the receiving dendrite. There, a chemical process takes over. Neurotransmitters carry the signal across the gap, and this transmission is partial, learned, and shaped by experience.

In other words, even though the output of the Almost Gate is binary, what happens next is anything but.
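One way to picture this is to give each synapse its own strength. The sketch below is a deliberate simplification: representing a synapse by a single number between 0.0 and 1.0, the function name `transmit`, and the evenly spaced strengths are all assumptions for illustration. Real synaptic transmission is a chemical process and far richer than a multiplication.

```python
# Hypothetical sketch: the axon signal is all-or-none, but each synapse
# passes on a different share of it. One number per synapse is an assumed
# simplification, not a model of real neurotransmitter dynamics.

def transmit(fired, synaptic_strengths):
    """Scale the all-or-none axon signal by each synapse's strength."""
    spike = 1.0 if fired else 0.0
    return [spike * strength for strength in synaptic_strengths]


# 100 receiving neurons with made-up strengths spread evenly from 0.0 to 1.0.
strengths = [i / 99 for i in range(100)]

received = transmit(True, strengths)
print(min(received), max(received))       # 0.0 1.0
print(sum(transmit(False, strengths)))    # 0.0
```

The same spike reaches every synapse, but each receiving neuron gets a different amount. In a learning system those strengths would change with experience, which this sketch does not attempt to model.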

Beyond the Senses

This post focused on sensory input to keep things concrete and relatable. But sensory neurons make up only about 0.1% of the brain’s total—just a sliver of the neural landscape. The real action lies deeper: in memory, learning, reasoning, and emotion. Cognition isn’t about the world as it is—but the world as it becomes within us.

The Almost Gate isn’t just for seeing or hearing. It’s for exploring how the mind makes sense of what it lets in.

For those inclined to explore further, neural network simulations in MATLAB, TensorFlow, and PyTorch offer hands-on ways to experiment with these ideas. A closer look at Kohonen networks and Self-Organizing Maps (SOMs) will be especially relevant to what comes next.

Coming Up: Layers of Neurons to SOM

In a coming post, we’ll explore how layers of Almost Gates—stacked and shaped by experience—give rise to Self-Organizing Maps. These maps don’t just process input. They structure it, revealing how the brain builds meaning from raw data.